Hi, I'm Pete Pittawat Taveekitworachai

I’m interested in shaping model behavior to align with human preferences, domain-specific requirements, task objectives, and cultural values.

My work includes first-author papers at EMNLP 2024 and 2025, leading Typhoon T1—Southeast Asia's first open reasoning model—and investigating medical reasoning models (MRMs) with novel alignment techniques.

Current focus

Conducting frontier research on LLM reasoning and post-training (SFT/RFT), and investigating LLM inference strategies.

Ship-ready builds

See the evaluation stacks and agent tooling delivered to teams.

Research notes

Read experiments, failure analyses, and applied prompting patterns.

Talks & workshops

Watch practical walkthroughs from conferences and private sessions.

About Me

I'm Pete (Pittawat Taveekitworachai). I’m interested in shaping model behavior to align with human preferences, domain-specific requirements (e.g., games and medical), task objectives, and cultural values.

I'm currently a research scientist at Typhoon (Thai open models), where I publish at top-tier venues and build evaluation tools that help teams test what their models can and cannot do.

Research Focus

Where my work on fine-tuning, workflow design, and field deployments currently concentrates.

Behavior Shaping

Exploring approaches to align model behavior with human preferences and domain requirements, including context engineering, prompt engineering, and post-training (SFT/RFT).

Reasoning Models

Developing open reasoning models like Typhoon T1 and investigating medical reasoning models (MRMs) with novel alignment techniques.

Evaluation & Safety

Understanding and mitigating biases and risks, and building evaluation frameworks such as BenchING to test how well models follow structured output formats.

Applied AI

Showcasing real-world applications (Typhoon Application Week) and collaborating on projects in healthcare and gaming.

Professional Highlights

Translating research into community resources, talks, and tooling.

Publications & writing

First-author papers at EMNLP 2024 and 2025 on prompting techniques and fine-tuning strategies, plus published work on LLM evaluation frameworks in IEEE Transactions on Games (ToG).

Talks & workshops

Spoke at the FOSSASIA Summit, SuperAI Engineer, and the IEEE Conference on Games (CoG) about reasoning models, fine-tuning, and prompt engineering.

Open-source tooling

Released Typhoon T1, the BenchING evaluation tools, and the ChatGPT4PCG competition platform, all open source and used by other teams.

What I publish and share with the community

Regular writing, peer-reviewed research, and talks that turn LLM research into approachable practice.

Fresh experiments and field notes

Short reads on evaluation, prompting techniques, and the practical side of running LLM systems in production.

Rethinking How Medical AI Reasons: Introducing the Typhoon–SiData+ Ranked-List Medical Reasoning Model

A collaborative research project exploring whether small models can outperform frontier models—like Gemini 2.5 Pro—when trained to produce ranked lists that better reflect real clinical reasoning.

- External

AI

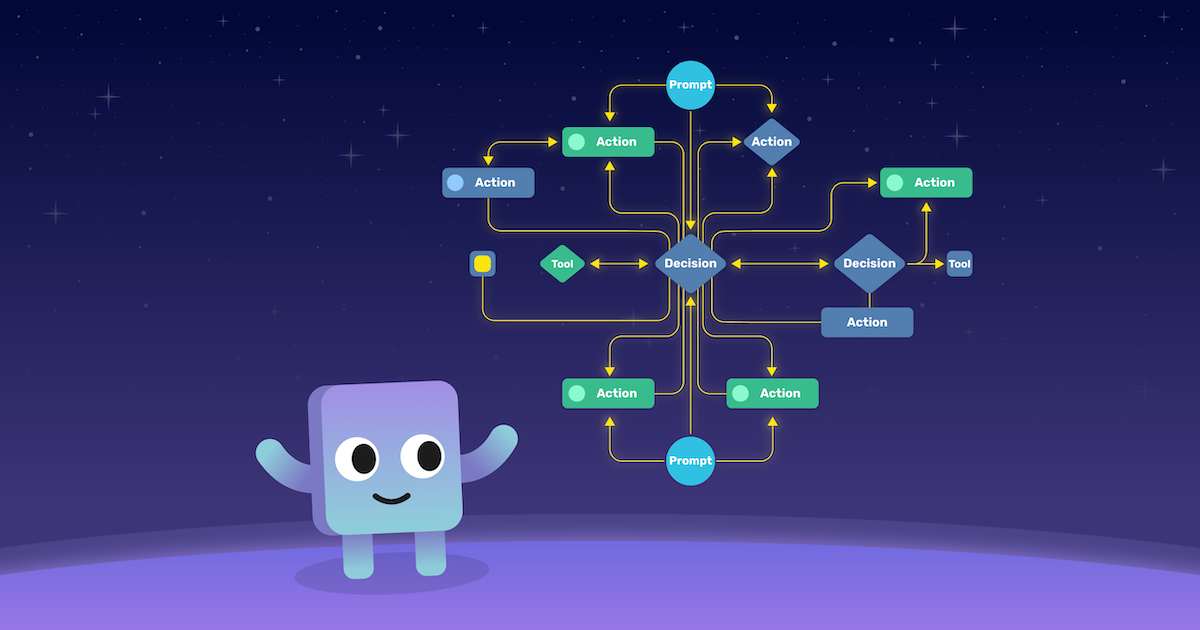

Mastering Agentic Workflows - 20 Principles to Build Smarter AI Systems

In recent years, large language models (LLMs) have evolved beyond text-based chatbots into agents capable of executing tools—functions that let them gather new information, interact with external systems, or even take actions that affect the real world.